Over the past 4 years I’ve had the opportunity to look more closely at the role of ICTs in Monitoring and Evaluation practice (and the privilege of working with Michael Bamberger and Nancy MacPherson in this area). When we started out, we wanted to better understand how evaluators were using ICTs in general, how organizations were using ICTs internally for monitoring, and what was happening overall in the space. A few years into that work we published the Emerging Opportunities paper that aimed to be somewhat of a landscape document or base report upon which to build additional explorations.

As a result of this work, in late April I had the pleasure of talking with the OECD-DAC Evaluation Network about the use of ICTs in Evaluation. I drew from a new paper on The Role of New ICTs in Equity-Focused Evaluation: Opportunities and Challenges that Michael, Veronica Olazabal and I developed for the Evaluation Journal. The core points of the talk are below.

*****

In the past two decades there have been 3 main explosions that affect M&E: a device explosion (mobiles, tablets, laptops, sensors, dashboards, satellite maps, Internet of Things, etc.); a social media explosion (digital photos, online ratings, blogs, Twitter, Facebook, discussion forums, WhatsApp groups, co-creation and collaboration platforms, and more); and a data explosion (big data, real-time data, data science and analytics moving into the field of development, capacity to process huge data sets, etc.). This new ecosystem is something that M&E practitioners should be tapping into and understanding.

In addition to these ‘explosions,’ there’s been a growing emphasis on documentation of the use of ICTs in Evaluation alongside a greater thirst for understanding how, when, where and why to use ICTs for M&E. We’ve held / attended large gatherings on ICTs and Monitoring, Evaluation, Research and Learning (MERL Tech). And in the past year or two, it seems the development and humanitarian fields can’t stop talking about the potential of “data” – small data, big data, inclusive data, real-time data for the SDGs, etc. and the possible roles for ICT in collecting, analyzing, visualizing, and sharing that data.

The field has advanced in many ways. But as the tools and approaches develop and shift, so do our understandings of the challenges. Concerns about more data, “open data” and the inherent privacy risks have caught up with the enthusiasm about the possibilities of new technologies in this space. Likewise, there is more in-depth discussion about methodological challenges, bias and unintended consequences when new ICT tools are used in Evaluation.

Why should evaluators care about ICT?

There are 2 core reasons that evaluators should care about ICTs. Reason number one is practical. ICTs help address real world challenges in M&E: insufficient time, insufficient resources and poor quality data. And let’s be honest – ICTs are not going away, and evaluators need to accept that reality at a practical level as well.

Reason number two is both professional and personal. If evaluators want to stay abreast of their field, they need to be aware of ICTs. If they want to improve evaluation practice and influence better development, they need to know if, where, how and why ICTs may (or may not) be of use. Evaluation commissioners need to have the skills and capacities to know which new ICT-enabled approaches are appropriate for the type of evaluation they are soliciting and whether the methods being proposed are going to lead to quality evaluations and useful learnings. One trick to using ICTs in M&E is understanding who has access to what tools, devices and platforms already, and what kind of information or data is needed to answer what kinds of questions or to communicate which kinds of information. There is quite a science to this and one size does not fit all. Evaluators, because of their critical thinking skills and social science backgrounds, are very well placed to take a more critical view of the role of ICTs in Evaluation and in the worlds of aid and development overall and help temper expectations with reality.

Though ICTs are being used across all phases of the program cycle (research/diagnosis and consultation, design and planning, implementation and monitoring, evaluation, reporting/sharing/learning), there is plenty of hype in this space.

There is certainly a place for ICTs in M&E, if introduced with caution and clear analysis about where, when and why they are appropriate and useful, and evaluators are well-placed to take a lead in identifying and trialing what ICTs can offer to evaluation. If they don’t, others are going to do it for them!

Promising areas

There are four key areas (I’ll save the nuance for another time…) where I see a lot of promise for ICTs in Evaluation:

1. Data collection. Here I’d divide it into 3 kinds of data collection and note that the latter two normally also provide ‘real time’ data:

- Structured data gathering – where enumerators or evaluators go out with mobile devices to collect specific types of data (whether quantitative or qualitative).

- Decentralized data gathering – where the focus is on self-reporting or ‘feedback’ from program participants or research subjects.

- Data ‘harvesting’ – where data is gathered from existing online sources like social media sites, WhatsApp groups, etc.

- Real-time data – which aims to provide data in a much shorter time frame, normally as monitoring, but these data sets may be useful for evaluators as well.

2. New and mixed methods. These are areas that Michael Bamberger has been looking at quite closely. New ICT tools and data sources can contribute to more traditional methods. But triangulation still matters.

- Improving construct validity – enabling a greater number of data sources at various levels that can contribute to better understanding of multi-dimensional indicators (for example, looking at changes in the volume of withdrawals from ATMs, records of electronic purchases of agricultural inputs, satellite images showing lorries traveling to and from markets, and the frequency of Tweets that contain the words hunger or sickness).

- Evaluating complex development programs – tracking complex and non-linear causal paths and implementation processes by combining multiple data sources and types (for example, participant feedback plus structured qualitative and quantitative data, big data sets/records, census data, social media trends and input from remote sensors).

- Mixed methods approaches and triangulation – using traditional and new data sources (for example, using real-time data visualization to provide clues on where additional focus group discussions might need to be done to better understand the situation or improve data interpretation).

- Capturing wide-scale behavior change – using social media data harvesting and sentiment analysis to better understand widespread changes in perceptions, attitudes and stated behaviors.

- Combining big data and real-time data – these emerging approaches may become valuable for identifying potential problems and emergencies that need further exploration using traditional M&E approaches.

3. Data Analysis and Visualization. This is an area that is less advanced than the data collection area – often it seems we’re collecting more and more data but still not really using it! Some interesting things here include:

- Big data and data science approaches – there’s a growing body of work exploring how to use predictive analytics to help define what programs might work best in which contexts and with which kinds of people. How this connects to evaluation is still being worked out, and there are lots of ethical aspects to think about here too: most of us don’t like the idea of predictive policing, and in some ways you could end up in a situation that is not quite what was aimed at. With big data, you’ll often have a hypothesis and you’ll go looking for patterns in huge data sets, whereas with evaluation you normally have particular questions and you design a methodology to answer them. It’s interesting to think about how these two approaches are going to combine.

- Data Dashboards – these are becoming very popular as people try to work out how to do a better job of using the data that is coming into their organizations for decision making. There are some efforts at pulling data from community level all the way up to UN representatives, for example, the global level consultations that were done for the SDGs or using “near real-time data” to share with board members. Other efforts are more focused on providing frontline managers with tools to better tweak their programs during implementation.

- Meta-evaluation – some organizations are working on ways to better draw conclusions from what we are learning from evaluation around the world and to better visualize these conclusions to inform investments and decision-making.

4. Equity-focused Evaluation. As digital devices and tools become more widespread, there is hope that they can enable greater inclusion and broader voice and participation in the development process. There are still huge gaps, however: in some parts of the world 23% fewer women have access to mobile phones, and when you talk about Internet access the gap is much, much bigger. But there are cases where greater participation in evaluation processes is being sought through mobile. When this is balanced with other methods to ensure that we’re not excluding the very poorest or those without access to a mobile phone, it can help to broaden out the pool of voices we are hearing from. Some examples are:

- Equity-focused evaluation / participatory evaluation methods – some evaluators are seeking to incorporate more real-time (or near real-time) feedback loops where participants provide direct feedback via SMS or voice recordings.

- Using mobile to directly access participants through mobile-based surveys.

- Enhancing data visualization for returning results back to the community and supporting community participation in data interpretation and decision-making.

Challenges

Alongside all the potential, of course there are also challenges. I’d divide these into 3 main areas:

A) Operational/institutional

Some of the biggest challenges to improving the use of ICTs in evaluation are institutional or related to institutional change processes. In focus groups I’ve done with different evaluators in different regions, this was emphasized as a huge issue. Specifically:

- Potentially heavy up-front investment costs, training efforts, and/or maintenance costs if adopting/designing a new system at wide scale.

- Tech or tool-driven M&E processes – often these are also donor driven. This happens because tech is perceived as cheaper, easier, at scale, objective. It also happens because people and management are under a lot of pressure to “be innovative.” Sometimes this ends up leading to an over-reliance on digital data and remote data collection and time spent developing tools and looking at data sets on a laptop rather than spending time ‘on the ground’ to observe and engage with local organizations and populations.

- Little attention to institutional change processes, organizational readiness, and the capacity needed to incorporate new ICT tools, platforms, systems and processes.

- Bureaucracy levels may mean that decisions happen far from the ground, and there is little capacity to make quick decisions even when real-time data is available. Data and analysis may go frequently to decision-makers sitting at a headquarters, or to local staff who do not have decision-making power in their own hands and must wait on orders from on high to adapt or change their program approaches and methods.

- Swinging too far towards digital due to a lack of awareness that digital most often needs to be combined with human. Digital technology always works better when combined with human interventions (such as visits to prepare folks for using the technology, or making sure that gatekeepers, e.g., a husband or mother-in-law in the case of women, are on board). A main message from the World Bank 2016 World Development Report “Digital Dividends” is that digital technology must always be combined with what the Bank calls “analog” (a.k.a. “human”) approaches.

B) Methodological

Some of the areas that Michael and I have been looking at relate to how the introduction of ICTs could address issues of bias, rigor, and validity — yet how, at the same time, ICT-heavy methods may actually just change the nature of those issues or create new issues, as noted below:

- Selection and sample bias – you may be reaching more people, but you’re still going to be leaving some people out. Who is left out of mobile phone or ICT access/use? Typical respondents are male, educated, urban. How representative are these respondents of all ICT users and of the total target population?

- Data quality and rigor – you may have an over-reliance on self-reporting via mobile surveys; lack of quality control ‘on the ground’ because it’s all being done remotely; enumerators may game the system if there is no personal supervision; there may be errors and bias in algorithms and logic in big data sets or analysis because of non-representative data or hidden assumptions.

- Validity challenges – if there is a push to use a specific ICT-enabled evaluation method or tool without it being the right one, the design of the evaluation may not pass the validity challenge.

- Fallacy of large numbers (in cases of national level self-reporting/surveying) – you may think that because a lot of people said something, it’s more valid, but you might just be reinforcing the viewpoints of a particular group. This has been shown clearly in research by the World Bank on public participation processes that use ICTs.

- ICTs often favor extractive processes that do not involve local people and local organizations or provide benefit to participants/local agencies — data is gathered and sent ‘up the chain’ rather than shared or analyzed in a participatory way with local people or organizations. Not only is this disempowering, it may impact on data quality if people don’t see any point in providing it as it is not seen to be of any benefit.

- There’s often a failure to identify unintended consequences or biases arising from use of ICTs in evaluation — What happens when you introduce tablets for data collection? What happens when you collect GPS information on your beneficiaries? What risks might you be introducing or how might people react to you when you are carrying around some kind of device?
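To make the selection and sample bias point above a bit more concrete, here is a minimal sketch of one way to check who is over- or under-represented among respondents to a mobile survey. Everything in it is an assumption for illustration: the function name `representation_gaps`, the respondent records and the population shares are invented, and a real check would use actual survey data and a credible population source such as a census.

```python
from collections import Counter


def representation_gaps(respondents, population_shares, key):
    """Return (share among respondents - share in target population) per group.

    Positive values mean a group is over-represented among respondents,
    negative values mean it is under-represented.
    """
    counts = Counter(r[key] for r in respondents)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no respondents to compare")
    return {
        group: round(counts.get(group, 0) / total - pop_share, 3)
        for group, pop_share in population_shares.items()
    }


# Hypothetical respondents to a mobile survey (invented for illustration).
respondents = [{"sex": "male"}] * 70 + [{"sex": "female"}] * 30

# Hypothetical shares in the total target population.
population_shares = {"male": 0.5, "female": 0.5}

gaps = representation_gaps(respondents, population_shares, "sex")
print(gaps)
```

A gap like this does not say why a group is missing, only that it is. It is a prompt to go and find out who was left out, ideally through the kind of on-the-ground follow-up described earlier.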

C) Ethical and Legal

This is an area that I’m very interested in — especially as some donors have started asking for the raw data sets from any research, studies or evaluations that they are funding, and when these kinds of data sets are ‘opened’ there are all sorts of ramifications. There is quite a lot of heated discussion happening here. I was happy to see that DFID has just conducted a review of ethics in evaluation. Some of the core issues include:

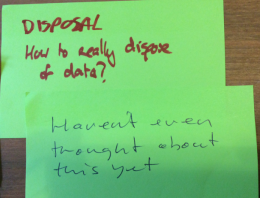

- Changing nature of privacy risks – issues here include privacy and protection of data; changing informed consent needs for digital data/open data; new risks of data leaks; and lack of institutional policies with regard to digital data.

- Data rights and ownership – here there are issues with proprietary data sets; data ownership when there are public-private partnerships; the idea of ‘data philanthropy’ when it’s not clear whose data is being donated; personal data ‘for the public good’; open data/open evaluation/transparency; poor care taken when vulnerable people provide personally identifiable information; household data sets ending up in the hands of those who might abuse them; and the increasing impossibility of data anonymization, given that crossing data sets often means that re-identification is easier than imagined.

- Moving decisions and interpretation of data away from ‘the ground’ and upwards to the head office/the donor.

- Little funding for trialing/testing the validity of new approaches that use ICTs and documenting what is working/not working/where/why/how to develop good practice for new ICTs in evaluation approaches.
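On the re-identification point in the data rights bullet above, a rough sketch may help show why removing names is often not enough. The code counts how many records in a data set are unique on a combination of seemingly harmless fields (sometimes called quasi-identifiers). The function name `unique_record_count`, the field names and the records are all hypothetical, and this is only one simple way of looking at the risk, not a full anonymization assessment.

```python
from collections import Counter


def unique_record_count(records, quasi_identifiers):
    """Count records whose combination of quasi-identifier values is unique.

    A unique combination can single out one household or person if another
    data set with the same fields is matched against this one.
    """
    combos = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return sum(1 for count in combos.values() if count == 1)


# Hypothetical 'anonymized' household records (no names, invented values).
records = [
    {"village": "A", "age_band": "20-29", "household_size": 4},
    {"village": "A", "age_band": "20-29", "household_size": 4},
    {"village": "A", "age_band": "60-69", "household_size": 2},
    {"village": "B", "age_band": "20-29", "household_size": 7},
]

by_village = unique_record_count(records, ["village"])
by_all = unique_record_count(records, ["village", "age_band", "household_size"])
print(by_village, by_all)
```

In this invented example, adding fields raises the number of records that can be singled out, which is the basic reason that crossing data sets makes re-identification easier than it looks.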

Recommendations: 12 tips for better use of ICTs in M&E

Despite the rapid changes in the field in the 2 years since we first wrote our initial paper on ICTs in M&E, most of our tips for doing it better still hold true.

1. Start with a high-quality M&E plan (not with the tech).

   - But also learn about the new tech-related possibilities that are out there so that you’re not missing out on something useful!

2. Ensure design validity.

3. Determine whether and how new ICTs can add value to your M&E plan.

   - It can be useful to bring in a trusted tech expert in this early phase so that you can find out if what you’re thinking is possible and affordable – but don’t let them talk you into something that’s not right for the evaluation purpose and design.

4. Select or assemble the right combination of ICT and M&E tools.

   - You may find one off the shelf, or you may need to adapt or build one. This is a really tough decision, which can take a very long time if you’re not careful!

5. Adapt and test the process with different audiences and stakeholders.

6. Be aware of different levels of access and inclusion.

7. Understand motivation to participate, incentivize in careful ways.

   - This includes motivation for both program participants and for organizations where a new tech-enabled tool/process might be resisted.

8. Review/ensure privacy and protection measures, risk analysis.

9. Try to identify unintended consequences of using ICTs in the evaluation.

10. Build in ways for the ICT-enabled evaluation process to strengthen local capacity.

11. Measure what matters – not what a cool ICT tool allows you to measure.

12. Use and share the evaluation learnings effectively, including through social media.

ything else. We often start from the point of collection when really it’s at the design stage when we should think about the burden of data collection and define what’s the minimum we can ask of people? How we design – even how we get consent – can inform how the whole process happens. It’s difficult to dispose of data when there are multiple versions of it and a data footprint.
